LangChain Indexing: Simplifying Document Organization and Enhancing Search Results

What Is Indexing?

In LangChain, indexing refers to the process of loading documents into a vector store and keeping them in sync with their sources. The indexing API lets you add and synchronize documents from various sources efficiently. Its main purpose is to avoid writing duplicate content, re-writing unchanged content, and re-computing embeddings over unchanged content, which saves time and money and improves vector search results. To do this, LangChain computes a hash for each document and stores the document hash, write time, and source ID in a record manager. LangChain also offers several deletion modes that control how existing documents in the vector store are cleaned up.
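To make the hashing idea concrete, here is a minimal sketch in plain Python. It is not LangChain's implementation: `doc_hash`, `index_docs`, the two dictionaries standing in for the record manager and the vector store, and the `cleanup="full"` flag are all hypothetical names chosen for illustration. It only shows how a stored hash lets unchanged documents be skipped and stale ones be deleted.

```python
import hashlib
import time


def doc_hash(text: str, source_id: str) -> str:
    """Stable fingerprint of a document's content and source."""
    return hashlib.sha256(f"{source_id}\n{text}".encode("utf-8")).hexdigest()


def index_docs(docs, record_manager, vector_store, cleanup=None):
    """Write only new or changed documents; skip ones already recorded.

    docs: list of (text, source_id) pairs.
    record_manager: dict mapping hash -> {"source_id", "written_at"}.
    vector_store: dict mapping hash -> text (stand-in for stored embeddings).
    cleanup: None keeps old entries; "full" deletes entries absent from docs.
    """
    stats = {"added": 0, "skipped": 0, "deleted": 0}
    seen = set()
    for text, source_id in docs:
        h = doc_hash(text, source_id)
        if h in seen:
            stats["skipped"] += 1  # duplicate within this batch
            continue
        seen.add(h)
        if h in record_manager:
            stats["skipped"] += 1  # unchanged: no rewrite, no re-embedding
            continue
        vector_store[h] = text  # a real store would compute an embedding here
        record_manager[h] = {"source_id": source_id, "written_at": time.time()}
        stats["added"] += 1
    if cleanup == "full":
        # Remove every recorded document that was not part of this run.
        for h in [h for h in record_manager if h not in seen]:
            del record_manager[h]
            del vector_store[h]
            stats["deleted"] += 1
    return stats
```

Calling `index_docs` twice with the same documents adds them on the first run and skips all of them on the second, which is the saving described above.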

Prerequisites:

All you need is a Jupyter notebook and LangChain installed in your environment. Check out the [Installation Instructions]

To get started with this project, you will need the following:

An OpenAI account with API access.

Basic knowledge of LangChain and its concepts.

Setup

1. Install Required Packages: Install the LangChain and OpenAI packages.

pip install langchain

pip install openai

2. Configure Environment Variables: Create a .env file and store your environment variables in it, in particular OPENAI_API_KEY. Install python-dotenv so the file can be loaded from Python:

pip install python-dotenv
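A missing key is a common cause of confusing errors later on, so it can help to fail early. The helper below is a small sketch using only the standard library; `require_env` is a hypothetical name, not part of python-dotenv or LangChain. It assumes the variable has already been placed in the process environment, for example by `load_dotenv()` reading a .env file that contains a line starting with `OPENAI_API_KEY=`.

```python
import os


def require_env(name: str) -> str:
    """Return an environment variable's value or fail with a clear message."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(
            f"{name} is not set; add it to your .env file or shell environment"
        )
    return value
```

With this in place, `require_env("OPENAI_API_KEY")` either returns the key or stops the notebook with an explicit error instead of a failed API call.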

Writing and Understanding the Code

• Import the necessary libraries:

import os

import openai

from dotenv import load_dotenv

from langchain.llms import OpenAI

from langchain.document_loaders import TextLoader

from langchain.indexes import VectorstoreIndexCreator

from langchain.chat_models import ChatOpenAI

Step 1: Load the OpenAI API key from the environment:

load_dotenv()

openai.api_key = os.getenv("OPENAI_API_KEY")

Step 2: Define the query you want to execute:

query = "I want an electrician for all the wiring fittings at home, Which electrician should I contact? List me the name of electricians"

Step 3: Load the text data using the TextLoader class:

loader = TextLoader("Sample_Data/list-of-names.txt")

text = loader.load()

Step 4: Create the vector index using the VectorstoreIndexCreator class:

index = VectorstoreIndexCreator().from_loaders([loader])
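Conceptually, a vector index turns each chunk of text into a vector and answers a query by returning the chunks whose vectors are closest to the query's vector. The toy below illustrates that idea with word counts and cosine similarity. It is a sketch, not what VectorstoreIndexCreator does internally: `embed`, `cosine`, and `ToyIndex` are hypothetical names, a real index uses learned embeddings, and the plumber line is made-up sample data.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real index uses a learned model)."""
    return Counter(text.lower().replace(",", " ").split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyIndex:
    """Stores (chunk, vector) pairs and ranks them against a query."""

    def __init__(self, chunks):
        self.entries = [(chunk, embed(chunk)) for chunk in chunks]

    def search(self, query: str, k: int = 4):
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


toy = ToyIndex([
    "Amelia Butler, Electrician, 123-345-4567",
    "Sample Person, Plumber, 000-000-0000",  # made-up line for contrast
])
top = toy.search("I want an electrician", k=1)
```

In the real pipeline, the retrieved chunks are then passed to the language model together with the question, which is what the query step does next.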

Step 5: Execute the query using the ChatOpenAI model:

results = index.query(query, llm=ChatOpenAI())

print(results)

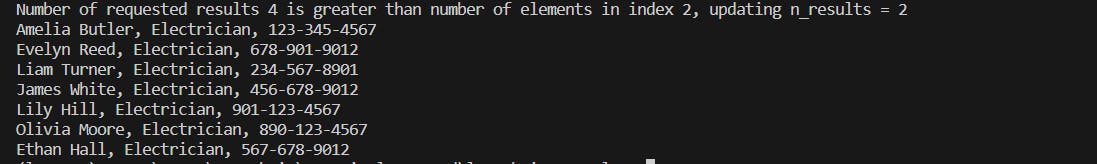

The output above is a list of electricians. Here is what each part means:

• "Number of requested results 4 is greater than the number of elements in the index 2, updating n_results = 2": This message indicates that 4 results were requested from the vector store, but the index only contains 2 elements. The code above never sets this number, so it is presumably a default. As a result, the number of requested results is reduced to 2.

• The following lines represent the list of electricians and their contact information. Each line includes the electrician's name, their profession (electrician), and their phone number.

• Amelia Butler, Electrician, 123-345-4567

• Evelyn Reed, Electrician, 678-901-9012

• Liam Turner, Electrician, 234-567-8901

• James White, Electrician, 456-678-9012

• Lily Hill, Electrician, 901-123-4567

• Olivia Moore, Electrician, 890-123-4567

• Ethan Hall, Electrician, 567-678-9012

In summary, the output provides a list of electricians along with their contact information. The initial message indicates that the number of requested results was adjusted based on the available data in the index.
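The adjustment described by that initial message amounts to capping the requested count at the index size. A minimal sketch of the logic follows; `clamp_n_results` and the logger name are hypothetical and this is not the vector store's actual code.

```python
import logging

logger = logging.getLogger("toy_index")


def clamp_n_results(requested: int, index_size: int) -> int:
    """Never ask for more results than the index holds."""
    if requested > index_size:
        logger.warning(
            "Number of requested results %d is greater than the number of "
            "elements in the index %d, updating n_results = %d",
            requested, index_size, index_size,
        )
        return index_size
    return requested
```

The message is a warning rather than an error: the query still runs, just with fewer retrieved chunks.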

Conclusion:

In this blog post, we learned how to use LangChain and OpenAI to answer a question over our own documents. By combining LangChain's indexing with OpenAI's language model, we can search a text file for entries that match specific requirements. This code snippet provides a starting point for building more advanced search functionality. Feel free to modify and customize it according to your needs.