Unleashing the Power of LangChain Embeddings: Efficient Text Processing and Analysis

What Is the LangChain Embedding Tool?

The LangChain Embedding Tool, as this article uses the term, is a pipeline built from LangChain components that turns raw text into searchable embeddings and answers questions over it. It covers text loading, splitting, embedding, storage, and retrieval. Its key components are:

• Text Loading: LangChain provides document loaders for many sources, such as text files and web pages. This article uses the TextLoader class, which reads a plain-text file into a list of Document objects that the rest of the pipeline can process.

• Text Splitting: Long documents are split into smaller chunks with the CharacterTextSplitter class, which splits text on a separator and merges the pieces into chunks of roughly chunk_size characters, with chunk_overlap characters shared between neighboring chunks. This is useful for lengthy documents, which may exceed the input limits of embedding and language models, and it keeps each embedding focused on a small piece of text.

• Embedding Creation: The OpenAIEmbeddings class calls OpenAI's embedding models to turn each chunk into a vector, a numerical representation that captures the chunk's semantic meaning. Texts with similar meanings get similar vectors, which enables downstream tasks such as similarity matching, clustering, and information retrieval.

• Vector Storage: Chroma, an open-source vector database with a LangChain integration, stores and indexes the generated embeddings. Given a query, it returns the stored chunks whose embeddings are most similar to the query's embedding, which makes searching large text collections fast.

• RetrievalQA: The RetrievalQA chain builds a retrieval-based question-answering system. For each question, it uses the vector store to fetch the most relevant chunks and passes them to a language model, which writes an answer based on that text. This is particularly useful for answering questions over large collections of documents.

Prerequisites:

You will need the following:

• A Jupyter notebook environment with LangChain installed. Check out the [Installation Instructions].

• An OpenAI account with API access.

• Basic knowledge of LangChain and its concepts.

Setup

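1. Install Required Packages: Install LangChain and the OpenAI client, plus chromadb (required by the Chroma vector store) and tiktoken (used by OpenAIEmbeddings to tokenize input text).

pip install langchain openai chromadb tiktoken

The imports in this walkthrough follow the layout of earlier LangChain releases. Newer releases move many of these classes into separate packages such as langchain-community and langchain-openai, so if an import fails, either pin an older langchain version or switch to the newer import paths.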

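2. Configure Environment Variables: Create a .env file in your project directory that stores your OpenAI API key as OPENAI_API_KEY=<your-key>. Then install python-dotenv and call load_dotenv() from the dotenv module at the top of your notebook to load the key into the environment.

pip install python-dotenv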
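LangChain's OpenAI classes, including ChatOpenAI and OpenAIEmbeddings, read the key from the OPENAI_API_KEY environment variable, and python-dotenv's load_dotenv() copies the entries of .env into os.environ. A small check like the hypothetical require_env helper below (our own name, not part of LangChain or python-dotenv) makes a missing key fail early with a clear message instead of deep inside a chain.

```python
import os
from typing import Mapping


def require_env(name: str = "OPENAI_API_KEY", env: Mapping[str, str] = os.environ) -> str:
    """Hypothetical helper: return the variable's value, or raise if it is missing or empty."""
    value = env.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; add it to .env and call load_dotenv() first")
    return value
```

For example, run require_env() in the notebook cell right after load_dotenv().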

Writing and Understanding the Code:

Step 1: OpenAI and ChatOpenAI Models

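from langchain import OpenAI

from langchain.chat_models import ChatOpenAI

# RetrievalQA and the agent below both use this llm; temperature=0 makes answers more repeatable

llm = ChatOpenAI(temperature=0)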

Step 2: Loading and Splitting Text

from langchain.document_loaders import TextLoader

from langchain.text_splitter import CharacterTextSplitter

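# Path to the plain-text file of names (forward slashes avoid invalid backslash escapes and work on Windows, macOS, and Linux)

text_document_path = "Sample_Data/list-of-names.txt"

loader = TextLoader(text_document_path)

# load() returns a list of Document objects

documents = loader.load()

# Split into chunks of about 1,000 characters, with 50 characters of overlap between neighboring chunks

text_splitter = CharacterTextSplitter(chunk_size=1000, chunk_overlap=50)

texts = text_splitter.split_documents(documents)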
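To make chunk_size and chunk_overlap concrete, here is a minimal pure-Python sketch of fixed-width chunking with overlap. It is a simplification, not LangChain's algorithm: CharacterTextSplitter first splits on a separator (a blank line by default) and then merges the pieces into chunks of about chunk_size characters, whereas the hypothetical split_with_overlap helper below cuts at exact character offsets. The sample names string is made up.

```python
def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Hypothetical helper: cut text into windows of at most chunk_size characters.

    Consecutive chunks share chunk_overlap characters.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window moves forward
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window already reaches the end
            break
    return chunks


# Made-up sample text standing in for the names file
names = "Alice Brown\nBob Singh\nCarla Diaz\n"
for chunk in split_with_overlap(names, chunk_size=16, chunk_overlap=4):
    print(repr(chunk))
```

The overlap is why a name that falls on a chunk boundary is not lost: as long as it is no longer than chunk_overlap characters, it appears whole in at least one chunk.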

Step 3: Creating Embeddings

from langchain.embeddings.openai import OpenAIEmbeddings

# Reads OPENAI_API_KEY from the environment and calls OpenAI's embedding endpoint

embeddings = OpenAIEmbeddings()
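Each embedding is a list of floats, and chunks are compared by how close their vectors are. The sketch below computes cosine similarity, one common closeness measure, on made-up three-dimensional vectors; real OpenAI embeddings are much longer (text-embedding-ada-002, the default model in older LangChain releases, returns 1,536 dimensions). Chroma's default distance is L2 rather than cosine, but OpenAI embeddings are normalized to length 1, and for unit vectors both measures rank results in the same order.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy vectors standing in for real embeddings
electrician = [0.9, 0.1, 0.0]
wiring = [0.8, 0.2, 0.1]
plumber = [0.1, 0.9, 0.2]

print(cosine_similarity(electrician, wiring))   # high: related meanings
print(cosine_similarity(electrician, plumber))  # lower: different meanings
```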

Step 4: RetrievalQA with Chroma

from langchain.vectorstores import Chroma

from langchain.chains import RetrievalQA

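# Embed every chunk and store the vectors in a Chroma collection

docsearch = Chroma.from_documents(texts, embeddings, collection_name="names-of-helpworkers")

# chain_type="stuff" puts all retrieved chunks into a single prompt for the llm

names_of_helpers = RetrievalQA.from_chain_type(llm=llm, chain_type="stuff", retriever=docsearch.as_retriever())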
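Conceptually, RetrievalQA with chain_type="stuff" does two things: the retriever returns the chunks most similar to the question (four by default for as_retriever()), and the chain "stuffs" all of them into one prompt for the LLM. The plain-Python sketch below mimics that flow. The word-count "embedding", the retrieve and build_stuff_prompt helpers, the prompt wording, and the sample chunks are all hypothetical stand-ins, not LangChain's actual prompt or API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Hypothetical stand-in for OpenAIEmbeddings: a bag-of-words count vector
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    # Rank stored chunks by similarity to the question and keep the top k
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_stuff_prompt(question: str, docs: list[str]) -> str:
    # "Stuff" every retrieved chunk into one prompt (hypothetical wording)
    context = "\n\n".join(docs)
    return (
        "Use the following pieces of context to answer the question.\n\n"
        f"{context}\n\nQuestion: {question}\nAnswer:"
    )


# Made-up sample chunks standing in for the split names file
chunks = [
    "Electrician A: wiring and fittings",
    "Plumber B: pipes and leaks",
    "Electrician C: wiring and panels",
]
question = "Which electrician is good at wiring and fittings?"
top_docs = retrieve(question, chunks)
print(build_stuff_prompt(question, top_docs))
```

The "stuff" strategy is the simplest one: a single LLM call sees every retrieved chunk at once, which works well as long as the retrieved text fits in the model's context window.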

Step 5: Initializing Tools

from langchain.agents import Tool

tools = [

Tool(

name="List of the electricians",

func=names_of_helpers.run,

description="useful for when you need to answer questions about the names of electricians who are good at wiring and fittings, using the list of electricians.",

),

]
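An agent never sees a tool's code, only its name and description, which are written into the agent's prompt. When the model picks a tool, the executor looks it up by name and calls its func with a string. The sketch below models that contract with a hypothetical SimpleTool dataclass and a stub in place of names_of_helpers.run. It is not LangChain's Tool class, and the "> name: description" line format is a simplified approximation of how the conversational agent lists its tools.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SimpleTool:
    # Hypothetical stand-in mirroring the three fields passed to Tool above
    name: str
    func: Callable[[str], str]
    description: str


def fake_retrieval_qa(question: str) -> str:
    # Stub for names_of_helpers.run; a real chain would query Chroma and the LLM
    return f"(answer to: {question})"


tools = [
    SimpleTool(
        name="List of the electricians",
        func=fake_retrieval_qa,
        description="useful for when you need to find electricians good at wiring and fittings",
    ),
]


def describe_tools(tools: list[SimpleTool]) -> str:
    # Name/description pairs like these are what the LLM reads in its prompt
    return "\n".join(f"> {t.name}: {t.description}" for t in tools)


def call_tool(tools: list[SimpleTool], name: str, tool_input: str) -> str:
    # After the LLM writes "Action: <name>", the tool is found by exact name
    by_name = {t.name: t for t in tools}
    return by_name[name].func(tool_input)


print(describe_tools(tools))
print(call_tool(tools, "List of the electricians", "Who is good at wiring?"))
```

Because the model chooses tools from the description alone, a clear, specific description matters more than the function behind it.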

Step 6: Initializing Agent

from langchain.agents import initialize_agent, AgentType

from langchain.chains.conversation.memory import ConversationBufferMemory

memory = ConversationBufferMemory(memory_key="chat_history")

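# The conversational ReAct agent decides on each turn whether to call a tool; memory supplies chat_history

agent_chain = initialize_agent(tools, llm, agent=AgentType.CONVERSATIONAL_REACT_DESCRIPTION, memory=memory, verbose=True)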
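ConversationBufferMemory keeps the whole conversation and hands it to the agent's prompt under the variable named by memory_key (chat_history above), so each new turn can refer back to earlier ones. The hypothetical BufferMemory class below sketches that idea in plain Python; its method names are our own rather than LangChain's, and the "Human:"/"AI:" prefixes mirror ConversationBufferMemory's defaults.

```python
class BufferMemory:
    """Hypothetical sketch: store every turn and replay it as one string."""

    def __init__(self, memory_key: str = "chat_history"):
        self.memory_key = memory_key
        self.turns: list[str] = []

    def add_turn(self, user_input: str, ai_output: str) -> None:
        # Append both sides of the exchange, in order
        self.turns.append(f"Human: {user_input}")
        self.turns.append(f"AI: {ai_output}")

    def as_prompt_variables(self) -> dict[str, str]:
        # The agent's prompt template would fill {chat_history} with this string
        return {self.memory_key: "\n".join(self.turns)}


memory = BufferMemory()
memory.add_turn("Hi", "Hello! What service do you need?")
memory.add_turn("An electrician", "Let me check the list of electricians.")
print(memory.as_prompt_variables()["chat_history"])
```

Because the buffer grows with every turn, long conversations eventually produce large prompts; that is the trade-off of keeping the full history verbatim.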

Step 7: Example Query and Output

query = """You are the operator of a highly advanced conversational chatbot named Agent.

Agent's primary function is to manage customer queries and provide relevant and useful information.

Agent is equipped with a vast database of information about various service providers for general household needs, such as electricians, plumbers, and more. Users interact with Agent to find suitable servicemen for their specific requirements.

Your task is to simulate the responses of Agent in a conversation, addressing.

"""

output = agent_chain.run(query)

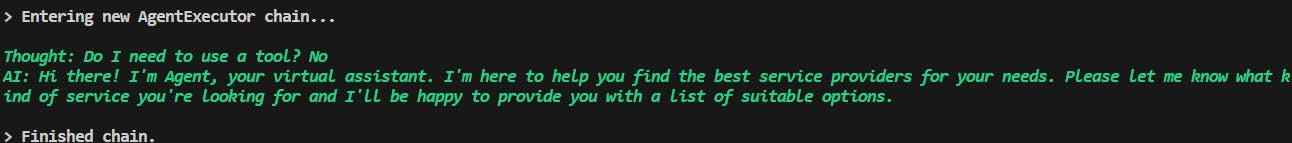

The output (shown in the image above) is the agent's verbose trace for this query, followed by its reply. Here is what each part means:

• "Entering new AgentExecutor chain...": Printed because verbose=True, this line marks the start of the agent's run, in which it processes the input and decides how to respond.

• "Thought: Do I need to use a tool? No": This is the agent's reasoning step, where it decides whether it needs a tool to handle the request. The query describes the agent's role rather than asking about a specific service provider, so the agent answers directly without calling the "List of the electricians" tool.

• "AI: Hi there! I'm Agent, your virtual assistant. I'm here to help you find the best service providers for your needs. Please let me know what kind of service you're looking for and I'll be happy to provide you with a list of suitable options.": This is the agent's reply. It introduces itself as "Agent", explains that it helps users find suitable service providers, and asks which type of service the user needs.

• "Finished chain.": This marks the end of the agent's run for this input.
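The "Thought: Do I need to use a tool?" line comes from the output format the conversational-react-description agent asks the LLM to follow: either an "Action:" / "Action Input:" pair naming a tool, or a final reply prefixed with "AI:". The sketch below shows how such text can be turned into "call a tool" versus "final answer". The parse_agent_output function and its regular expression are our own simplification, not LangChain's output parser.

```python
import re
from typing import Union


def parse_agent_output(text: str) -> Union[tuple[str, str], str]:
    """Hypothetical parser: (tool_name, tool_input) for a tool call, else the final reply."""
    # A final answer is introduced with the "AI:" prefix
    if "AI:" in text:
        return text.split("AI:", 1)[1].strip()
    # Otherwise expect "Action: <tool>" followed by "Action Input: <input>"
    match = re.search(r"Action: (.*?)\n+Action Input: (.*)", text)
    if match is None:
        raise ValueError(f"Could not parse agent output: {text!r}")
    return match.group(1).strip(), match.group(2).strip()


no_tool = "Thought: Do I need to use a tool? No\nAI: Hi there! I'm Agent, your virtual assistant."
with_tool = (
    "Thought: Do I need to use a tool? Yes\n"
    "Action: List of the electricians\n"
    "Action Input: electricians good at wiring"
)
print(parse_agent_output(no_tool))
print(parse_agent_output(with_tool))
```

In the run shown above, the model took the first branch, so no tool was called and its reply was returned directly.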

Conclusion:

In conclusion, the LangChain Embedding Tool combines text loading, splitting, embedding creation, vector storage, and retrieval-based question answering into one pipeline for processing and analyzing text.

Document loaders import text from sources such as text files or web pages, and the text splitter breaks lengthy documents into chunks that fit within model input limits. OpenAI's embedding models turn each chunk into a vector that captures its semantic meaning, which supports similarity matching, clustering, and information retrieval. Chroma stores and indexes these vectors so that the chunks most similar to a query can be found quickly.

The RetrievalQA chain builds on this by passing the retrieved chunks to a language model, which answers user queries from them. Wrapped as a tool and given to a conversational agent with memory, it becomes the basis of a chatbot that decides for itself when to consult the stored data.

Overall, the tool lets you load, split, embed, and query text with little code, which makes it useful for information retrieval, chatbots, document analysis, and more.